Lucid v1 - a world model that does go brrr 🔥🔥🔥 on consumer hardware

Real-time latent world models for Minecraft

Humans don’t run on actual code. We learn to simulate the rules of the world by observing its interactions with ourselves and with everything else in it. You could say one of the most important human traits is an advanced capacity for simulation, and that’s what we call a world model. One of the biggest challenges in generative AI, robotics, and almost anything AI is how restricted that simulation capacity is in today’s models; solving it would give us much stronger, more creative and more capable models.

This is why I’ve been working on this project over the past month or so. I’m calling it Lucid-v1. The goal was to build super-compressed latent world models that allow real-time inference on consumer-grade hardware; this model is meant to faithfully emulate Minecraft’s environments and world.

You can try Lucid-v1 here and find the model weights on Hugging Face.

The initial plan was to model video efficiently, but the logistics of handling high-quality video models quickly became overwhelming. Inspired by my collaborator Ollin’s work on a Pokémon world model, we started discussing smaller, efficient world models that could run on standard hardware. Our conversations led to Lucid’s core idea: a compressed latent space designed for real-time interaction and accurate emulation.

What makes Lucid-v1 special?

DiTs use self-attention, whose cost grows quadratically with sequence length. Typical DiTs are trained on latents that are spatially reduced by at most 16x, so a single Minecraft frame needs 500+ tokens and 2 seconds of gameplay comes to around 20k tokens. That is a major bottleneck for any real-time application. Lucid gets away with 15 tokens per frame, or about 600 tokens for 2 seconds of gameplay! That lets us run inference much, much faster, and also train with much larger batch sizes without needing large GPU clusters.
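To make the arithmetic concrete, here is a quick back-of-the-envelope sketch using only the figures quoted above. The 40-frame clip length is an assumption inferred from 600 / 15 (roughly 20 frames per second for 2 seconds), and the helper names are made up for illustration; the n² pair count ignores everything in a transformer except self-attention.

```python
# Back-of-the-envelope token budget, using only the figures quoted in the post.
FRAMES_IN_CLIP = 40  # assumption: 2 s of gameplay, inferred from 600 / 15


def clip_tokens(tokens_per_frame: int, frames: int = FRAMES_IN_CLIP) -> int:
    """Total sequence length for one clip."""
    return tokens_per_frame * frames


def attention_pairs(sequence_length: int) -> int:
    """Self-attention compares every token with every token: n^2 pairs."""
    return sequence_length ** 2


typical = clip_tokens(500)  # 16x-reduced latents, ~500 tokens per frame
lucid = clip_tokens(15)     # Lucid: 15 tokens per frame

print(typical, lucid)  # 20000 600
ratio = attention_pairs(typical) / attention_pairs(lucid)
print(round(ratio))    # 1111
```

So a roughly 33x shorter sequence translates into on the order of a 1000x reduction in attention pairs, which is where most of the real-time headroom comes from.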

Below is a short excerpt of the YOLO science I did; the full technical report will be published soon.

Training the VAE

Training the VAE presented some challenges, especially with such a small latent space. A bit of tuning over a couple of sweeps went into finding the right architecture and hyperparameters. But the result was worth it: Lucid can now generate scenes in real time with minimal computational resources, compressing 2 seconds of gameplay into around 600 tokens, which makes it ideal for interactive applications.

Training DiT

Initial attempts to replicate the GameNGen approach quickly became intractable given the compute needed to simulate Minecraft: it seems to need many more epochs to learn the open-ended environment, and early tests showed poor generalization.

The project evolved to training Diffusion Transformers with Diffusion Forcing (Rolling diffusion). Initial experiments with Diffusion Forcing using v-pred proved challenging to optimize and train effectively. Switching to a Rectified Flow version of Diffusion Forcing yielded significantly improved results, producing crisper outputs while maintaining the essential requirement for minimal sampling steps.
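For readers who haven’t seen the two ideas combined, here is a minimal NumPy sketch of how a training example could be built. This is not Lucid’s actual training code: the function name, the tensor shapes, the uniform noise-level sampling, and the convention that t = 0 is clean data and t = 1 is pure noise are all assumptions made for illustration.

```python
import numpy as np


def diffusion_forcing_rf_pair(clean, rng):
    """Build one training example (hypothetical helper, not Lucid's code).

    clean: array of shape (frames, tokens, channels) holding clean latents.
    Returns (noisy, t, velocity_target).
    """
    frames = clean.shape[0]
    # Diffusion Forcing: every frame draws its own noise level in [0, 1).
    t = rng.uniform(0.0, 1.0, size=(frames, 1, 1))
    noise = rng.standard_normal(clean.shape)
    # Rectified Flow: straight-line interpolation between data and noise.
    noisy = (1.0 - t) * clean + t * noise
    # The model would be trained to regress the velocity along that line.
    velocity_target = noise - clean
    return noisy, t, velocity_target


rng = np.random.default_rng(0)
# 40 frames x 15 tokens per frame; the channel width of 8 is arbitrary.
clean = rng.standard_normal((40, 15, 8))
noisy, t, target = diffusion_forcing_rf_pair(clean, rng)

# With the exact velocity, a single Euler step of size t recovers the data.
recovered = noisy - t * target
print(np.allclose(recovered, clean))  # True
```

The last lines hint at why few sampling steps can be enough: the path from noise to data is a straight line, so the better the model’s velocity estimate, the fewer Euler steps are needed to follow it.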

Scaling up

Model scaling proved to be a crucial factor in performance. The following experiments demonstrate how different parameter scales of the DiT architecture affect results, while keeping the VAE architecture constant:

100M params model

500M params model

1B params model

TL;DR

Scaling Lucid from 50M to 1B parameters brought significant improvements in performance and realism. The 50M-parameter model exhibited divergence issues, while the 100M-parameter version was more stable. At 500M and 1B parameters, the results look very close to the actual Minecraft game.

My thoughts

I think super compression for constrained worlds is very important, and I think there’s a long way to go in making models work well with features that are trained specifically to match the distribution of the data. GameNGen did an awesome job starting from Stable Diffusion, but the approach I worked on scaled much more nicely than theirs. Samples are below (this is a super short demo of this method with only 100K gameplay frames instead of the 900M used by the authors, and 100M params instead of the 1B params used by the authors).

I expect video models might soon converge on the autoregressive approach, with small latents guiding the diffusion of large pixel or latent-space patches. I believe the work I’ve done here shows very clear advantages to this approach: better understanding of the scene’s physics, faster training, and the KV cache that’s made LLMs what they are now.
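As a reminder of what the KV cache buys an autoregressive model, here is a toy single-head sketch in NumPy. It is a generic illustration, not Lucid’s inference code: the `KVCache` class and the per-token stepping are assumptions, and a real world model would cache per layer and per head and append a whole frame’s tokens at a time.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def causal_attention(q, k, v):
    """Reference: full causal attention over the whole sequence at once."""
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v


class KVCache:
    """Keeps keys and values of past tokens so they are never recomputed."""

    def __init__(self, dim):
        self.keys = np.empty((0, dim))
        self.values = np.empty((0, dim))

    def step(self, q_new, k_new, v_new):
        """Attend one new token (each argument has shape (dim,)) to the past."""
        self.keys = np.vstack([self.keys, k_new])
        self.values = np.vstack([self.values, v_new])
        scores = self.keys @ q_new / np.sqrt(q_new.shape[0])
        return softmax(scores) @ self.values


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((6, 4)) for _ in range(3))

cache = KVCache(dim=4)
stepwise = np.stack([cache.step(q[i], k[i], v[i]) for i in range(6)])
print(np.allclose(stepwise, causal_attention(q, k, v)))  # True
```

Each step only does work proportional to the current context length instead of recomputing attention for the whole sequence, which is what makes step-by-step generation cheap enough for interactive use.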

What’s done today

Released model weights for both VAE and DiT for minecraft

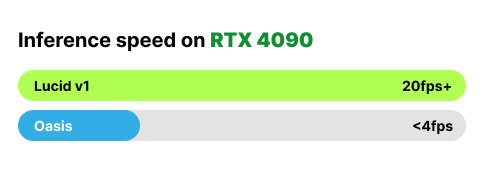

Released local inference code (with full interactivity and real-time support on a 4090)

Released real-time demo for everyone!

This blog :-)

Next steps:

Memory !!!!

Something akin to RNN hidden states would be valuable here

A memory bank, and structuring memory in a specific, differentiable way, is also something I’m very interested in

Audio in World Models, I’d like to add audio somewhere here :)

Scaling up for more complex worlds

Finetuning huge video models as world models? Maybe something else?

Foundational World Model + LoRAs that can be trained super quickly?

PS: This project was started way before the Decart release and is not a derivative of it; the original motivator was the GameNGen paper.

PS: Part of the training compute was sponsored by FAL.AI

& a special thanks to: Jack Gallagher, Lucas Nestler, Ethan Smith, Dyllan, Adam Hibble, Simo Ryu, Alberto Hojel & Albi for awesome advice…

Diffusion Forcing: Next-token Prediction Meets Full-Sequence Diffusion by Chen et al., Jul 1, 2024.

World Models by David Ha & Jürgen Schmidhuber, Mar 26, 2018.

Game Emulation via Neural Network by Ollin Boer Bohan, Sep 5, 2022.

Scalable Diffusion Models with Transformers by William Peebles & Saining Xie, Dec 19, 2022.

Diffusion Models Are Real-Time Game Engines by Valevski et al., Aug 27, 2024.